We have already covered the Primary Index tutorial, where we saw how Primary Indexes work and how they help us maximize performance.

Today we will learn how to choose the Primary Index for a given table. But before we define the criteria for choosing a Primary Index, here is a tip:

TIP

If you don't define a Primary Index on a table, Teradata chooses one on its own. It makes the decision based on the points below:

1. If any column has a Primary Key constraint in the table definition, Teradata makes that column the Unique Primary Index.

2. If there is no Primary Key but one or more columns have a Unique constraint, Teradata chooses only the first such column in the table definition as the Unique Primary Index and makes the others Unique Secondary Indexes.

3. If no column is defined as a Primary Key or with a Unique constraint, Teradata makes the first column in the table definition a Non-Unique Primary Index.
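
The three rules in the tip can be written down as a small decision function. This is only a minimal Python sketch of the rules as stated above, not Teradata's actual implementation; the `Column` class and `default_indexes` function are hypothetical names invented for illustration.

```python
from dataclasses import dataclass


@dataclass
class Column:
    """Hypothetical description of one column in a table definition."""
    name: str
    primary_key: bool = False
    unique: bool = False


def default_indexes(columns):
    """Return (pi_kind, pi_column, usi_columns) following the three tip rules.

    `columns` must be listed in table-definition order.
    """
    # Rule 1: a Primary Key column becomes the Unique Primary Index.
    # The tip does not say what happens to other UNIQUE columns in this
    # case, so this sketch reports no secondary indexes for it.
    pk = next((c.name for c in columns if c.primary_key), None)
    if pk is not None:
        return ("UPI", pk, [])

    # Rule 2: the first UNIQUE column becomes the Unique Primary Index,
    # the remaining UNIQUE columns become Unique Secondary Indexes.
    unique_cols = [c.name for c in columns if c.unique]
    if unique_cols:
        return ("UPI", unique_cols[0], unique_cols[1:])

    # Rule 3: otherwise the first column becomes a Non-Unique Primary Index.
    return ("NUPI", columns[0].name, [])


# Example: no Primary Key, two UNIQUE columns.
table = [Column("dept_name"), Column("emp_no", unique=True), Column("ssn", unique=True)]
print(default_indexes(table))
```

Relying on these defaults is rarely a good idea, because the first column of a table is not necessarily the one that gives good access or distribution.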

Primary Index Choice Criteria

Now let's look at the criteria for choosing a good Primary Index. There are three Primary Index choice criteria: Access Demographics, Distribution Demographics, and Volatility.

Access Demographics

By Access Demographics we mean the columns that users use to access the table, i.e. the columns used in the WHERE clause of SQL statements. Choose the column(s) most frequently used for access, to maximize the number of one-AMP operations. We need to consider both value access and join access.

Distribution Demographics

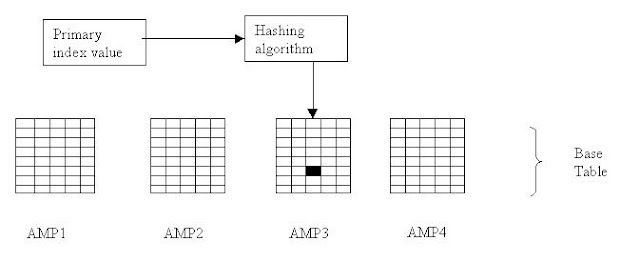

As we know from our previous post on Primary Indexes, the more unique the index, the better the distribution. Optimizing distribution optimizes parallel processing.
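
To see why uniqueness matters, here is a rough Python simulation of rows being spread over AMPs by hashing the Primary Index value. It is only a sketch under stated assumptions: the 8-AMP system, the MD5-based `amp_for` function, and the sample columns are all made up for illustration, and the hash is a stand-in for Teradata's real hashing algorithm.

```python
import hashlib
from collections import Counter

NUM_AMPS = 8  # hypothetical system size


def amp_for(value, num_amps=NUM_AMPS):
    """Map a PI value to an AMP number using a stand-in hash (not Teradata's)."""
    digest = hashlib.md5(str(value).encode("utf-8")).digest()
    return int.from_bytes(digest[:4], "big") % num_amps


def rows_per_amp(pi_values, num_amps=NUM_AMPS):
    """Count how many rows land on each AMP for the given PI values."""
    counts = Counter(amp_for(v, num_amps) for v in pi_values)
    return [counts.get(amp, 0) for amp in range(num_amps)]


def skew(per_amp):
    """Busiest AMP's row count divided by the average; 1.0 is perfectly even."""
    average = sum(per_amp) / len(per_amp)
    return max(per_amp) / average


# A unique column (e.g. an employee number) versus a column with two values.
unique_ids = list(range(10_000))
two_values = ["ACTIVE" if i % 2 else "CLOSED" for i in range(10_000)]

print("unique column:    ", rows_per_amp(unique_ids), skew(rows_per_amp(unique_ids)))
print("two-valued column:", rows_per_amp(two_values), skew(rows_per_amp(two_values)))
```

With the two-valued column, at most two AMPs ever receive rows, so the rest sit idle while those two do all the work. The unique column spreads the rows across every AMP.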

Volatility

If you know the meaning of the word volatile, you have probably guessed this one. It means we have to choose a column with a low change rate. The Primary Index should not be volatile at all, because any change to a PI value results in heavy I/O overhead: the row's location depends on its PI value, so when the value changes the row has to be moved from one AMP to another. Therefore, we have to choose a column with stable values.
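
The cost of a volatile Primary Index can be illustrated with the same kind of toy model. In this Python sketch, the 8-AMP system and the MD5-based `amp_for` function are assumptions made for illustration (not Teradata's real hashing), and `rows_moved` is a hypothetical helper that counts how many rows would have to change AMP after their PI values are updated.

```python
import hashlib

NUM_AMPS = 8  # hypothetical system size


def amp_for(value, num_amps=NUM_AMPS):
    """Map a PI value to an AMP number using a stand-in hash (not Teradata's)."""
    digest = hashlib.md5(str(value).encode("utf-8")).digest()
    return int.from_bytes(digest[:4], "big") % num_amps


def rows_moved(old_pi_values, new_pi_values, num_amps=NUM_AMPS):
    """Count rows whose AMP changes when the PI value goes from old to new."""
    return sum(
        1
        for old, new in zip(old_pi_values, new_pi_values)
        if amp_for(old, num_amps) != amp_for(new, num_amps)
    )


# Update the PI value of every row in a 1,000-row table.
old_values = list(range(1_000))
new_values = [v + 1_000_000 for v in old_values]

moved = rows_moved(old_values, new_values)
print(f"{moved} of {len(old_values)} updated rows land on a different AMP")
```

In this model most updated rows end up on a different AMP, since a new PI value hashes to an unrelated location. A stable column avoids that movement entirely.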

**Note**

There is a trade-off between access and distribution demographics. The most desirable situation is to find a column that has both good access and good distribution demographics.